---
language:
- en
- de
- es
- fr
- ja
- ko
- zh
- it
- pt
library_name: diffusers
license: other
license_name: ltx-2-community-license-agreement
license_link: https://github.com/Lightricks/LTX-2/blob/main/LICENSE
pipeline_tag: image-to-video
arxiv: 2601.03233
tags:
- image-to-video
- text-to-video
- video-to-video
- image-text-to-video
- audio-to-video
- text-to-audio
- video-to-audio
- audio-to-audio
- text-to-audio-video
- image-to-audio-video
- image-text-to-audio-video
- ltx-2
- ltx-2-3
- ltx-video
- ltxv
- lightricks
pinned: true
demo: https://app.ltx.studio/ltx-2-playground/i2v
---

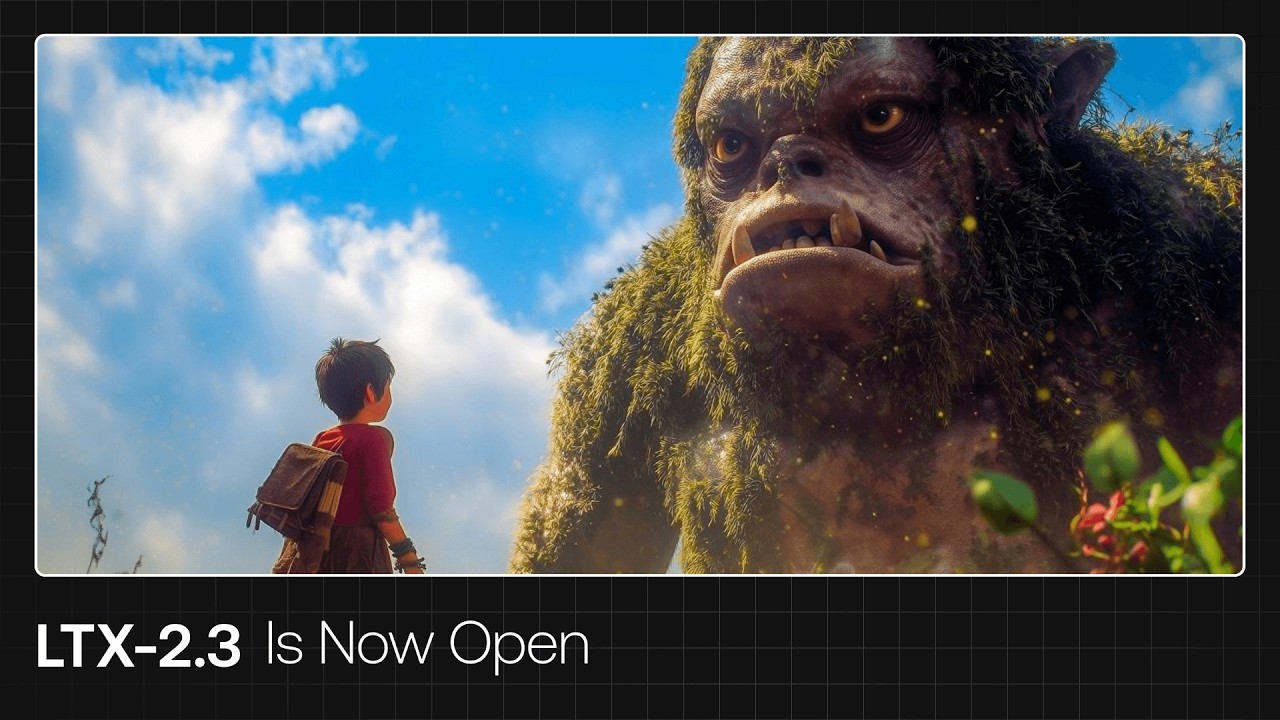

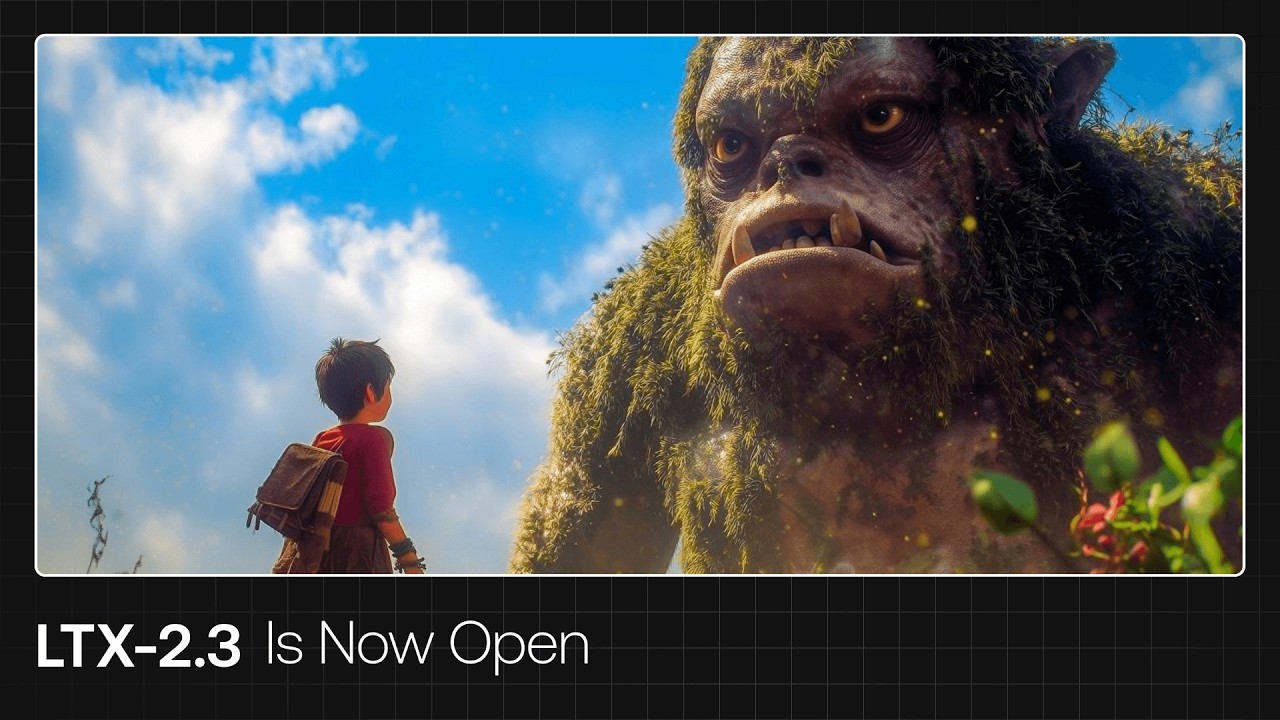

LTX-2.3 FP8 Model Card

This is the FP8 version of the LTX-2.3 model. All information below is derived from the base model.

This model card focuses on LTX-2.3, a significant update to LTX-2 with improved audio and visual quality and enhanced prompt adherence.

LTX-2 was presented in the paper LTX-2: Efficient Joint Audio-Visual Foundation Model (arXiv:2601.03233).

💻💻 If you want to dive right into the code, it is available in the LTX-2 repository: https://github.com/Lightricks/LTX-2 💾💾

LTX-2.3 is a DiT-based audio-video foundation model designed to generate synchronized video and audio within a single model. It brings together the core building blocks of modern video generation, with open weights and a focus on practical, local execution.

Model Checkpoints

| Name | Notes |
|------------------------------------|--------------------------------------------------------------------------------------------------------------------|
| ltx-2.3-22b-dev-fp8 | The full model, flexible and trainable in bf16 |
| ltx-2.3-22b-distilled-fp8 (coming soon) | The distilled version of the full model, 8 steps, CFG=1 |
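For context on the "CFG=1" note above: under the standard classifier-free guidance formulation (an assumption here, since this card does not state the exact form LTX-2.3 uses), the guided prediction combines an unconditional and a conditional model output with a guidance scale s. Setting s = 1 makes the unconditional term cancel:

```latex
\hat{\epsilon}_\theta(x_t, c)
  = \epsilon_\theta(x_t, \varnothing)
  + s\,\bigl(\epsilon_\theta(x_t, c) - \epsilon_\theta(x_t, \varnothing)\bigr),
\qquad
s = 1 \;\Rightarrow\; \hat{\epsilon}_\theta(x_t, c) = \epsilon_\theta(x_t, c)
```

Under this formulation, a distilled checkpoint run at CFG=1 needs only the conditional pass at each step, so each of its 8 steps costs roughly one model evaluation instead of two.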

Model Details

- Developed by: Lightricks

- Model type: Diffusion-based audio-video foundation model

- Language(s): English

Online demo

LTX-2.3 is accessible right away via the API Playground.

Run locally

Direct use license

You can use the models (full, distilled, upscalers, and any derivatives of the models) for purposes permitted under the license.

ComfyUI

We recommend you use the built-in LTXVideo nodes that can be found in the ComfyUI Manager.

For manual installation information, please refer to our documentation site.

PyTorch codebase

The LTX-2 codebase is a monorepo with several packages, ranging from the model definition in `ltx-core` to pipelines in `ltx-pipelines` and training capabilities in `ltx-trainer`.

The codebase was tested with Python >= 3.12 and CUDA > 12.7, and supports PyTorch ~= 2.7.

Installation

```bash
git clone https://github.com/Lightricks/LTX-2.git
cd LTX-2

# From the repository root
uv sync
source .venv/bin/activate
```

Inference

To use our model, please follow the instructions in our ltx-pipelines package.

Diffusers 🧨

LTX-2.3 support in the Diffusers Python library is coming soon!

General tips:

- Width and height must be divisible by 32. The frame count must be a multiple of 8 plus 1 (that is, 8k + 1, such as 97 or 121).

- If the resolution or number of frames does not meet these constraints, pad the input with -1 up to the next valid size, then crop the result back to the desired resolution and number of frames.

- For tips on writing effective prompts, please visit our Prompting guide.
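The size rules in the tips above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not code from `ltx-pipelines`: the helper names (`next_multiple_of_32`, `next_8k_plus_1`, `pad_video`, `crop_video`) are hypothetical, the video is a nested list of single-channel frames with values in [-1, 1], and padding is assumed to go at the end of each dimension (bottom rows, right columns, trailing frames), which the tips do not specify.

```python
# Hypothetical helpers (not part of ltx-pipelines) illustrating the size rules:
# width/height must be multiples of 32, frame count must be of the form 8k + 1.
# A video is modeled as video[frame][row][col] with values in [-1, 1].

Video = list[list[list[float]]]


def next_multiple_of_32(n: int) -> int:
    """Smallest multiple of 32 that is >= n."""
    return ((n + 31) // 32) * 32


def next_8k_plus_1(n: int) -> int:
    """Smallest value of the form 8k + 1 that is >= n."""
    return ((n - 1 + 7) // 8) * 8 + 1


def pad_video(video: Video, pad_value: float = -1.0) -> Video:
    """Pad frames, rows, and columns at the end up to the next valid size.

    Assumption: padding is appended after existing content in every dimension.
    """
    frames, height, width = len(video), len(video[0]), len(video[0][0])
    target_f = next_8k_plus_1(frames)
    target_h = next_multiple_of_32(height)
    target_w = next_multiple_of_32(width)

    padded: Video = []
    for f in range(target_f):
        frame = []
        for y in range(target_h):
            if f < frames and y < height:
                # Copy the original row and extend it on the right.
                row = video[f][y] + [pad_value] * (target_w - width)
            else:
                # Entirely new padding row (extra rows or extra frames).
                row = [pad_value] * target_w
            frame.append(row)
        padded.append(frame)
    return padded


def crop_video(video: Video, frames: int, height: int, width: int) -> Video:
    """Crop back to the requested size by slicing from the origin."""
    return [[row[:width] for row in frame[:height]] for frame in video[:frames]]


if __name__ == "__main__":
    clip = [[[0.0] * 50 for _ in range(30)] for _ in range(10)]  # 10 frames of 30x50
    padded = pad_video(clip)
    print(len(padded), len(padded[0]), len(padded[0][0]))  # 17 32 64
    assert crop_video(padded, 10, 30, 50) == clip
```

Appending the padding at the end of each dimension keeps the crop a simple slice from the origin; with a real tensor you would apply the same sizes using your framework's padding and slicing operations.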

Limitations

- This model is not intended or able to provide factual information.

- As a statistical model this checkpoint might amplify existing societal biases.

- The model may fail to generate videos that match the prompts perfectly.

- Prompt following is heavily influenced by the prompting style.

- The model may generate content that is inappropriate or offensive.

- When generating audio without speech, the audio may be of lower quality.

Train the model

Currently, we recommend training the bf16 model. Recipes for training the FP8 model are welcome as community contributions.

Citation

```bibtex
@article{hacohen2025ltx2,
  title={LTX-2: Efficient Joint Audio-Visual Foundation Model},
  author={HaCohen, Yoav and Brazowski, Benny and Chiprut, Nisan and Bitterman, Yaki and Kvochko, Andrew and Berkowitz, Avishai and Shalem, Daniel and Lifschitz, Daphna and Moshe, Dudu and Porat, Eitan and Richardson, Eitan and Guy Shiran and Itay Chachy and Jonathan Chetboun and Michael Finkelson and Michael Kupchick and Nir Zabari and Nitzan Guetta and Noa Kotler and Ofir Bibi and Ori Gordon and Poriya Panet and Roi Benita and Shahar Armon and Victor Kulikov and Yaron Inger and Yonatan Shiftan and Zeev Melumian and Zeev Farbman},
  journal={arXiv preprint arXiv:2601.03233},
  year={2025}
}
```
